Results

PIA Days

PIA days are organized every semester to provide an opportunity for PIA students to present their work.

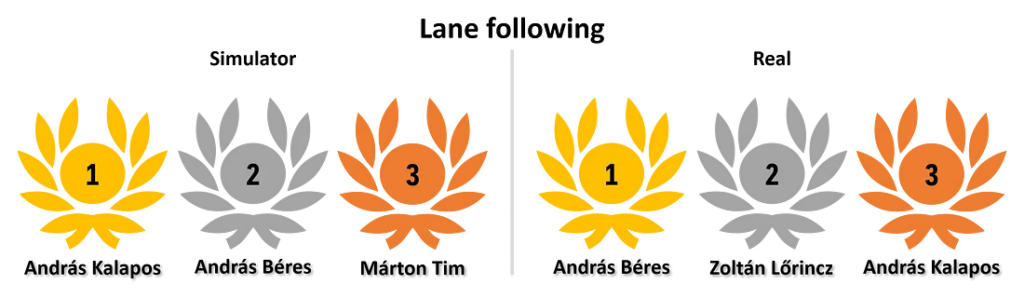

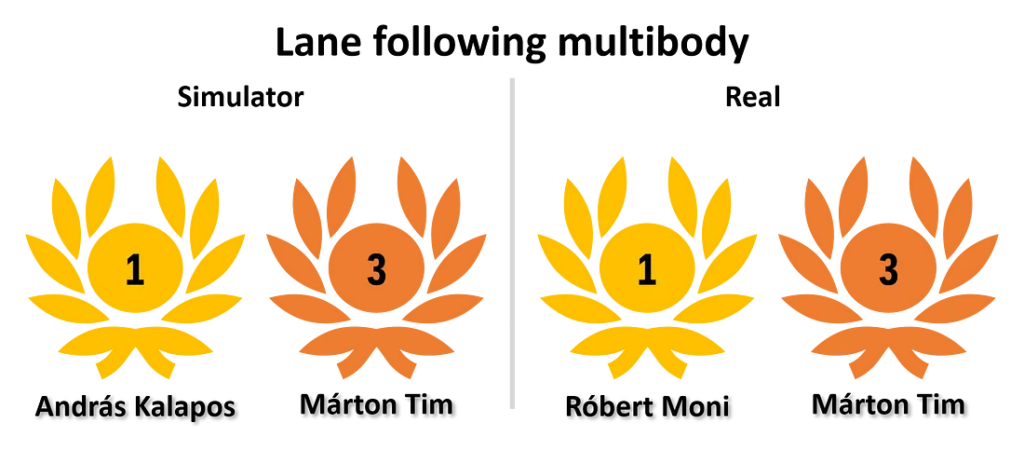

AI Driving Olympics 5th and 6th editions

In 2020 PIA students competed with 6 different solutions at the 5th edition of the AI Driving Olympics (AIDO) which was part of the 34th conference on Neural Information Processing Systems (NeurIPS). There was a total of 94 competitors with 1326 submitted solutions, thus we proudly announce that our team ranked top in 2 out of 3 challenges.

PIA students also competed in the 6th edition of AIDO with excellent results.